Confidently Access Simple and Secure CloudDB (Mongo) in AWS

In this post, I tell you how I was able to connect to a CloudDB (AWS MongoDB equivalent) in a...

Make Me Happy: Easily Optimize AWS Organization with Terraform

Like the title says: it makes me happy to help others with things I’ve learned. I have a couple of...

Optimizing development to eliminate daily update of SSO variables

In my recent foray into code development, I found myself grappling with the intricacies of connecting to various AWS services....

Easy GoLang AWS SDK V2 Upgrade

In my day job I have a pretty extensive library of routines I use to wrap cloud automation using the...

AWS Accounts as Cattle

Managing infrastructure as cattle, not pets, and automating DNS migration between accounts

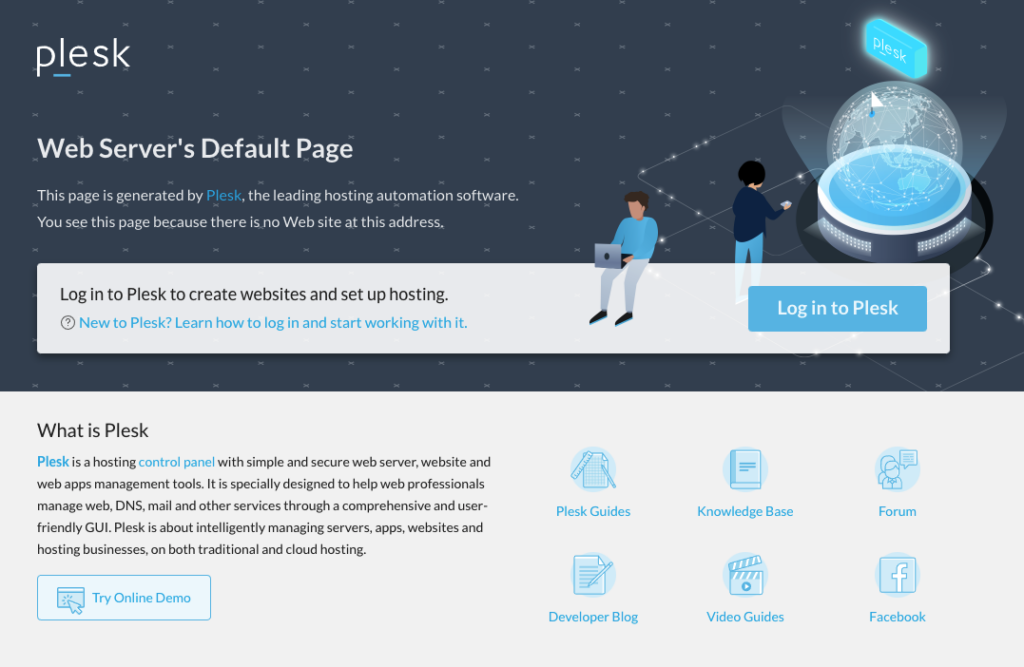

Plesk Migration to AWS

I have been running my hosting on 1and1 (now Ionos) since the early days of the web. In the...

AWS Login Code As Infrastructure CloudFormation

I set up AWS accounts in a hub-and-spoke model, using AWS Organizations for login. This means...

CloudFormation Custom Resources

CloudFormation is the Amazon Web Services (AWS) method of creating repeatable infrastructure as code. Technically, it uses templates that describe resources to...

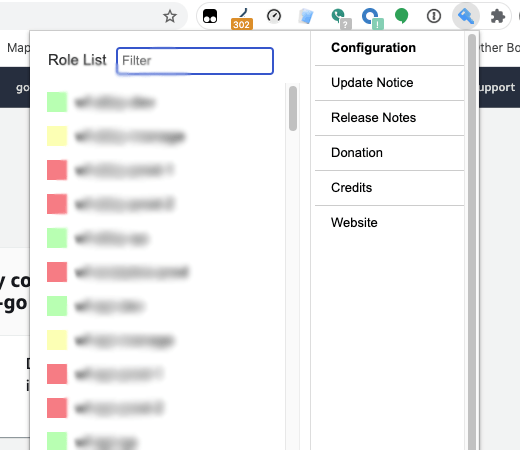

AWS Extend Switch Roles

I learned about a change in how AWS Extend Switch Roles works, and how to use the extension in Chrome after that change. AWS Extend Switch Roles Update 2.0.3

AWS CloudFormation with Optional Resources

I had a situation where I had to bypass the creation of some resources in CloudFormation. Either the resources already exist,...